Modelling Open or Closed Data Ecosystems

Building a data-sharing ecosystem requires a clear understanding of regulatory implications, governance frameworks, standards, rules, and technology. The Raidiam Trust Platform provides predefined components that help governing organizations effectively model ecosystems—whether open or closed, national or global.

Raidiam’s advisory and implementation services help tailor the ecosystem to meet your specific needs. In this article, explore the key components and critical decisions involved in creating a robust and sustainable data-sharing ecosystem.

If you’re not already in the process of setting up your ecosystem, start by visiting our Get Started Guide.

If you haven’t connected with Raidiam yet, Get in Touch to explore how we can help you build and implement your data-sharing ecosystem.

Key Ecosystem Design Considerations

When designing an ecosystem, it's essential to answer the following questions, as each decision impacts the overall structure and functionality.

Before creating the ecosystem:

After the ecosystem is created:

- What types of participants will be included in the ecosystem?

- What Roles and Permissions are available for Organisations and Resources?

What is the Access Model: Open or Closed

When designing an ecosystem using the Raidiam Trust Platform, one of the fundamental decisions administrators face is choosing the Access Model: whether the ecosystem will be Open or Closed.

Open Ecosystems

In an Open Ecosystem, key information about participants and their technical resources—such as authorization servers, APIs, and registered applications—is accessible to the public. This fosters transparency and encourages innovation by enabling third-party developers and organizations to discover and potentially collaborate with ecosystem participants.

However, openness does not equate to lack of control:

- Controlled Registration: Only entities approved by the ecosystem's governing organization (e.g., trust framework administrators) can join and interact with participants.

- Access Based on Conformance: Participants must meet the ecosystem’s conformance requirements. For example, organizations may need to demonstrate compliance with specific security profiles, data API standards, or operational requirements. Non-conformance can result in restricted access or disqualification.

- Data Access for Registered and Accredited Participants: In an Open Ecosystem, certain information, such as details about participants (e.g., Data Providers and Data Receivers) and their technical resources (e.g., APIs and authorization servers), is publicly accessible. This transparency fosters innovation and broad participation by allowing anyone on the internet to discover what resources are available within the ecosystem.

However, access to the actual data offered by Data Providers is strictly controlled. Only organizations that are both accredited and registered within the ecosystem can integrate with the Data Provider's authorization server, obtain access tokens, and call APIs to access sensitive user data (e.g., retrieving account details). Furthermore, such access is typically contingent on obtaining the user’s explicit consent, ensuring compliance with privacy and security standards.

This dual approach—public discovery of resources combined with restricted access to sensitive data—balances openness with trust, enabling secure and compliant data sharing.
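The split between public discovery and restricted data access can be sketched as two separate checks. This is a minimal illustration, not platform code: the `Participant` fields and both function names are assumptions, and user consent is treated as mandatory here even though the text above only says it is typically required.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Participant:
    name: str
    registered: bool   # approved by the governing organization
    accredited: bool   # has met the ecosystem's conformance requirements


def can_discover_resources(ecosystem_is_open: bool,
                           requester: Optional[Participant]) -> bool:
    """Directory metadata is public in an Open ecosystem, restricted otherwise."""
    if ecosystem_is_open:
        return True  # anyone, including anonymous callers, may browse
    return requester is not None and requester.registered


def can_access_user_data(requester: Optional[Participant],
                         user_consented: bool) -> bool:
    """Sensitive data needs registration, accreditation, and user consent."""
    if requester is None:
        return False
    return requester.registered and requester.accredited and user_consented
```

Keeping the two checks separate mirrors the model described above: the Access Model only changes who can discover resources, while access to user data stays restricted in both Open and Closed ecosystems.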

Closed Ecosystems

In contrast, a Closed Ecosystem keeps all participant and resource information private. There is no public discovery of resources or participants. Access is tightly controlled and restricted to those who are explicitly invited or approved.

While this model offers enhanced privacy and exclusivity, it also requires robust processes for onboarding and managing participants, as there is no public interface to facilitate discovery or engagement.

Key Considerations for All Models

- Conformance and Compliance: Both Open and Closed ecosystems often implement strict conformance requirements to ensure security, interoperability, and trust. Non-compliant participants can be excluded or have their interactions suspended.

- Governing Body’s Role: Whether the ecosystem is Open or Closed, the governing organization is responsible for managing participant registration, compliance monitoring, and enforcement of rules.

- Use Case Alignment: An Open model may be better suited for ecosystems emphasizing innovation and broad participation, such as Open Banking at a national level. Closed or Private models are better suited to sensitive, restricted, or collaborative use cases.

This decision shapes the ecosystem's accessibility and operational dynamics, so it must align with the goals of the initiative and its governing structure.

What Security Standards Will Be Applied within the Ecosystem

Open Data ecosystems require robust security standards to ensure the integrity, confidentiality, and authenticity of data exchanges between participants. A recommended approach is to adopt the Financial-grade API (FAPI) 2.0 Security Profile and FAPI Message Signing Profile as the foundation for ecosystem security. These profiles provide a comprehensive framework for securing API interactions, ensuring both strong protection and usability.

Financial-grade API (FAPI) 2.0

Developed by the OpenID Foundation, the FAPI 2.0 profiles are designed to deliver high security and interoperability across various use cases. While originally developed for financial APIs, FAPI is broadly applicable to any sector requiring secure and reliable data exchanges. Key features of FAPI 2.0 include:

- High Security: Robust client authentication, token integrity, and message confidentiality ensure the ecosystem's data is protected against modern threats.

- Improved Usability: Simplified implementation reduces integration complexity for participants, enhancing adoption and interoperability.

- Future-proofing: Advanced techniques ensure resilience against evolving security challenges.
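As one concrete example of the protections involved, the FAPI 2.0 Security Profile builds on the authorization code flow with PKCE (RFC 7636) using the S256 challenge method. The sketch below shows how a client could derive a verifier and challenge pair, and how a server could check it. The function names are illustrative assumptions, and the rest of the profile (for example pushed authorization requests and sender-constrained tokens) is out of scope here.

```python
import base64
import hashlib
import secrets
from typing import Tuple


def _s256(code_verifier: str) -> str:
    """Base64url-encode (without padding) the SHA-256 hash of the verifier."""
    digest = hashlib.sha256(code_verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def make_pkce_pair() -> Tuple[str, str]:
    """Return (code_verifier, code_challenge) for the S256 method."""
    # 32 random bytes give a 43-character URL-safe verifier,
    # which is within the 43 to 128 character range RFC 7636 allows.
    code_verifier = secrets.token_urlsafe(32)
    return code_verifier, _s256(code_verifier)


def verify_pkce(code_verifier: str, code_challenge: str) -> bool:
    """Server-side check: recompute the challenge from the presented verifier."""
    return secrets.compare_digest(_s256(code_verifier), code_challenge)
```

The client sends the challenge with the authorization request and the verifier with the token request, so an intercepted authorization code is useless without the verifier.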

Additional Standards for Specific Scenarios

In addition to the FAPI 2.0 Security and Message Signing Profiles, ecosystems can incorporate other OAuth and OpenID Connect standards as needed to support specific user journeys or requirements. For example, standards like Client Initiated Backchannel Authentication (CIBA) can be used to address unique authentication scenarios where direct user interaction is not possible.

By building the ecosystem’s security framework on FAPI 2.0 profiles and extending it with additional standards as required, governing bodies can ensure both strong security and adaptability to diverse participant needs.

While it is possible to base the standards on OAuth 2.0 and FAPI 1.0 Advanced if required, this approach is generally not recommended unless interoperability with existing environments necessitates it. FAPI 1.0 Advanced provides robust security but lacks the usability improvements and future-proofing enhancements introduced in FAPI 2.0, which makes FAPI 2.0 the better choice for most ecosystems.

What Registration Framework Will Govern the Ecosystem

A critical aspect of ecosystem design is deciding the Registration Framework that will govern how applications are registered at the authorization servers that stand in front of the APIs participants expose. This decision directly impacts the scalability, security, and ease of onboarding for new participants and their applications.

The Raidiam Trust Platform supports two primary registration frameworks: OAuth Dynamic Client Registration and OpenID Federation. Each framework has distinct characteristics and use cases.

OpenID Federation

OpenID Federation takes a different approach from OAuth Dynamic Client Registration (described below), leveraging established trust relationships between entities in the ecosystem. Instead of registering clients dynamically, trust is based on pre-configured agreements and cryptographically signed metadata.

Upon integration into the Ecosystem Trust Framework, Data Receivers are allocated a unique client_id, bound to the encryption and transport certificates they have created. These credentials are used to authenticate the Data Receiver’s requests and to secure token issuance via the Data Provider's Authorization Server endpoints.

To enable this process, Data Providers must proactively retrieve the relevant metadata for each Data Receiver's client_id from the Trust Framework, ensuring the registered Data Receiver metadata is always up to date.

Key features include:

- Support for Multi-Sector Federations: OpenID Federation enables the creation of multiple ecosystem schemes, ranging from national to global, allowing different initiatives (such as Banking, Insurance, and Health) to coexist under a single federation. The architecture is inherently flexible, making it easy to start with one initiative and add others over time. This scalability ensures that ecosystems can evolve and expand without requiring major reconfigurations or disruptions to existing setups.

- Pre-established Trust: Entities within the ecosystem agree on a set of policies and trust each other based on shared metadata.

- Centralized or Distributed Governance: Federation can support either a centralized model (managed by a single governing body) or a distributed model (managed collaboratively by multiple parties).

- Enhanced Security: Since trust is established upfront, the risk of unauthorized or malicious registrations is minimized.

OpenID Federation is the most modern specification that enables organisations to establish trust for data sharing, and it solves several issues found with OAuth DCR:

| DCR Issue | Solution |
|---|---|
| Registration requests vary for each Client-Server interaction, causing interoperability issues when these requests are misprocessed or miscommunicated. | Servers automatically register clients based on the Participant Directory's Entity Statements, which eliminates individual registration requests and improves interoperability. |
| Registration data is often outdated, because an active PUT request against the registration endpoint is required to update the Client metadata. | A server-side cache policy for registration metadata ensures timely updates, keeping client data in line with what is defined in the Participant Directory. |
| Issues during the registration journey can lead to client identifiers being created without the registration access_token being sent back, forcing manual recovery of tokens or removal of clients through bilateral Service Desk tickets. | A unique, standardized client_id, with the Trust Framework as the source of truth, removes the need for client-side maintenance and streamlines the process. |
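The server-side cache policy mentioned above can be sketched as a small time-to-live cache keyed by client_id. This is only an illustration under stated assumptions: `fetch` stands in for whatever lookup a Data Provider performs against the Trust Framework, and the class name and TTL value are hypothetical rather than part of any specification.

```python
import time
from typing import Callable, Dict, Tuple

ClientMetadata = Dict[str, object]


class ClientMetadataCache:
    """Caches Data Receiver metadata fetched from the trust framework."""

    def __init__(self, fetch: Callable[[str], ClientMetadata],
                 ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch        # hypothetical lookup against the directory
        self._ttl = ttl_seconds    # cache policy chosen by the ecosystem
        self._clock = clock        # injectable so the policy can be tested
        self._entries: Dict[str, Tuple[float, ClientMetadata]] = {}

    def get(self, client_id: str) -> ClientMetadata:
        now = self._clock()
        cached = self._entries.get(client_id)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]       # still fresh, no round trip needed
        metadata = self._fetch(client_id)   # refresh from the source of truth
        self._entries[client_id] = (now, metadata)
        return metadata
```

Because the authorization server refreshes entries on its own schedule, a Data Receiver never has to push an update to each server individually, which is the contrast with the PUT-based DCR update described in the table.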

At the time of writing (December 2024), OpenID Federation is a relatively new specification, and the number of authorization servers that natively support it remains limited.

OAuth Dynamic Client Registration

OAuth Dynamic Client Registration (DCR) allows clients (applications) to register dynamically with an authorization server, automating much of the onboarding process. This approach is widely used in ecosystems where scalability and flexibility are priorities.

Key features include:

- Dynamic Onboarding: Applications can programmatically register with authorization servers without manual intervention, streamlining the onboarding process.

- Customizable Client Metadata: Participants can provide metadata about their applications, such as redirect URIs, contact information, and authentication method preferences.

- Security and Conformance: The governing organization can define security requirements, ensuring that only compliant clients are allowed to register.
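To make the client metadata concrete, the sketch below builds a registration request body using field names defined in RFC 7591. The policy checks (allowed authentication methods, https-only redirect URIs) and all example URLs are illustrative assumptions, not requirements of any particular ecosystem.

```python
import json

# Illustrative policy: an ecosystem would define its own permitted methods.
ALLOWED_AUTH_METHODS = {"private_key_jwt", "tls_client_auth"}


def build_registration_request(redirect_uris, jwks_uri, contacts,
                               auth_method="private_key_jwt"):
    """Build an RFC 7591 client metadata document after basic policy checks."""
    if auth_method not in ALLOWED_AUTH_METHODS:
        raise ValueError(f"auth method not permitted: {auth_method}")
    if not redirect_uris:
        raise ValueError("at least one redirect URI is required")
    if any(not uri.startswith("https://") for uri in redirect_uris):
        raise ValueError("redirect URIs must use https")
    return {
        "redirect_uris": list(redirect_uris),
        "token_endpoint_auth_method": auth_method,
        "grant_types": ["authorization_code", "refresh_token"],
        "response_types": ["code"],
        "jwks_uri": jwks_uri,
        "contacts": list(contacts),
    }


# Example values are placeholders on the reserved example.com domain.
request_body = json.dumps(build_registration_request(
    redirect_uris=["https://app.example.com/callback"],
    jwks_uri="https://app.example.com/jwks.json",
    contacts=["ops@example.com"],
))
```

In a real flow, `request_body` would be sent in a POST to the authorization server's registration endpoint, and the server would respond with the issued client identifier.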

What Types of Certificates and Key Types Will Be Used

The selection of certificates and key types is a cornerstone of a secure and interoperable ecosystem. Certificates play a pivotal role in enabling secure communications, authenticating participants and applications, and ensuring the integrity and confidentiality of data exchanges.

The choice of which certificate and key types to use may be influenced by the selected security standard profile and its specific requirements.

Different profiles may mandate certain cryptographic algorithms, key lengths, or certificate attributes to meet their security and interoperability goals.

Certificate Types

In most ecosystems, certificates are categorized based on their intended use.

Server Certificates:

- Transport Certificate: Used to establish mutual TLS (mTLS) channels for secure API communication, ensuring encrypted and mutually authenticated data exchange.

- Signature Certificate: Ensures the authenticity and non-repudiation of server-issued messages by digitally signing payloads.

- Encryption Certificate: Encrypts messages to ensure confidentiality during transmission.

Client Certificates:

- Transport Certificate: Secures mTLS channels from the client side for encrypted and authenticated communication.

- Signature Certificate: Used for client authentication via `private_key_jwt` in OAuth flows and for signing payloads to ensure non-repudiation.

- Encryption Certificate: Encrypts messages sent by the client to maintain confidentiality.

Certificate Usage

Certificates are employed in various aspects of ecosystem operations:

- mTLS (Mutual TLS): Provides mutual authentication and encryption between participants' applications.

- Message Signing (JWS): Ensures authenticity and non-repudiation for data payloads exchanged between participants.

- Message Encryption (JWE): Maintains confidentiality for sensitive data exchanged between participants.

- Client Authentication: Enables client applications to authenticate with authorization servers using the `tls_client_auth` and `private_key_jwt` OAuth client authentication methods.
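For `private_key_jwt`, the client proves its identity by signing a short-lived JWT with the private key that matches its signature certificate. The sketch below only assembles the claim set described in RFC 7523 and OpenID Connect Core; signing is left out because it needs a JOSE library. The function name and default lifetime are assumptions, and the exact `aud` value expected by a server depends on the security profile in use.

```python
import time
import uuid
from typing import Optional


def client_assertion_claims(client_id: str, audience: str,
                            lifetime_seconds: int = 60,
                            now: Optional[int] = None) -> dict:
    """Build the claims of a private_key_jwt client assertion (unsigned)."""
    issued_at = int(time.time()) if now is None else now
    return {
        "iss": client_id,             # issuer: the client itself
        "sub": client_id,             # subject: also the client
        "aud": audience,              # the authorization server being called
        "iat": issued_at,
        "exp": issued_at + lifetime_seconds,  # keep assertions short-lived
        "jti": str(uuid.uuid4()),     # unique ID so replays can be rejected
    }
```

The signed assertion is then sent to the token endpoint, where the authorization server verifies the signature against the client's registered keys.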

Key Algorithms and Attributes

The following are commonly adopted standards for keys and certificate attributes:

- Key Algorithms: RSA with 2048-bit or 4096-bit keys, depending on the security requirements of the ecosystem.

- Message Digest Algorithms: SHA-256 for secure hashing.

- Key Usages and Extended Key Usages (EKU): Extended Key Usage values like `clientAuth` and `serverAuth`, and Key Usage values like `dataEncipherment`, define the specific purposes for which a certificate can be used.

Certificate Lifecycle Management

Ecosystems typically impose strict policies to ensure the ongoing validity and reliability of certificates:

- Validity Period: Certificates often have a 12–13-month validity to ensure periodic renewal and updated cryptographic standards.

- Revocation and Status Checking: Real-time revocation checks via OCSP or CRL are essential, with cached statuses refreshed every 15 minutes.

- Renewal Policies: Certificates should be renewed at least one month before expiration to prevent service disruptions.

By standardizing these elements, ecosystems ensure a robust and secure foundation for all participants and applications.
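The key and lifecycle rules above lend themselves to automated checks. The following sketch works on already-parsed certificate attributes rather than on real X.509 data, so it needs no cryptography library. The `CertificateInfo` type is hypothetical, and "one month" is approximated as 30 days.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class CertificateInfo:
    key_algorithm: str      # e.g. "RSA"
    key_size_bits: int
    digest_algorithm: str   # e.g. "SHA-256"
    not_after: datetime     # expiry timestamp (timezone-aware)


RENEWAL_WINDOW = timedelta(days=30)  # "at least one month", approximated


def policy_violations(cert: CertificateInfo) -> List[str]:
    """Return a list of human-readable problems; empty means compliant."""
    problems = []
    if cert.key_algorithm != "RSA" or cert.key_size_bits not in (2048, 4096):
        problems.append("key must be RSA with 2048 or 4096 bits")
    if cert.digest_algorithm != "SHA-256":
        problems.append("digest must be SHA-256")
    return problems


def needs_renewal(cert: CertificateInfo, now: datetime) -> bool:
    """True once the certificate is inside the renewal window."""
    return now >= cert.not_after - RENEWAL_WINDOW
```

A scheduled job could run both checks across all registered certificates and notify organisation administrators before a certificate lapses.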

What Certification Framework Is Needed If Any

The governing body of an ecosystem may determine that organizations and/or their technical resources—such as apps, APIs, or authorization servers—must be certified to participate in the ecosystem.

Certification serves as proof that the organization or its resources meet the requirements defined by the ecosystem, ensuring compliance, interoperability, and security.

One example of a certification requirement is certification by the OpenID Foundation (OIDF), which enables deployments of OpenID Connect and the Financial-grade API (FAPI) to be certified against specific conformance profiles. This certification promotes interoperability among implementations, ensuring that different participants within an ecosystem can communicate and exchange data according to standardized protocols.

For some ecosystems, certification by the OIDF may be sufficient to demonstrate compliance. However, Raidiam also offers a conformance suite that allows participants to execute tests implemented in line with the ecosystem standards, providing an additional layer of flexibility and control. This process empowers organizations to verify their conformance before seeking formal certification, helping to streamline their participation in the ecosystem.

Purpose of Certification

Certification provides a standardized mechanism to:

- Verify compliance with the ecosystem’s technical and operational requirements.

- Ensure that participants implement best practices for security and data exchange.

- Foster trust among participants by providing assurances about the quality and reliability of services.

Examples from Open Banking Ecosystems

In an Open Banking ecosystem, certification can be applied to various components:

- Authorization Servers:

  - Ecosystems often define specific security profiles for authorization servers to ensure secure data exchange. Certification validates that these servers adhere to the required security standards, such as support for mutual TLS (mTLS) or specific OAuth flows.

  - For example, certification might verify compliance with a security profile requiring strict client authentication mechanisms and robust token validation processes.

- Data APIs:

  - Banks or financial institutions exposing APIs (e.g., payment initiation or account information APIs) may need to undergo certification to confirm they comply with ecosystem standards.

  - Certification ensures that all participating banks expose uniform API endpoints, with consistent behavior and data formats, simplifying integration for third-party developers and enhancing competition by reducing barriers to entry.

Certification Process and Governance

The certification process typically involves the following steps:

- Specification Definition: The governing body defines the technical and operational requirements for certification.

- Evaluation: Organizations or their resources undergo evaluation to confirm compliance with the specified requirements. This may include security testing, functional validation, and conformance checks.

- Certification Issuance: Upon successful evaluation, a certification is issued, granting the entity permission to participate in the ecosystem.

- Ongoing Monitoring: Certifications may require periodic renewal or monitoring to ensure continued compliance as standards evolve.

- Participant Offboarding: If a participant fails to maintain compliance or can no longer meet the ecosystem's requirements (for instance, by failing to guarantee the security of exchanged data), they may be offboarded. Offboarding includes revoking their certifications and disabling their technical access.

Key Considerations for Certification Frameworks

- Flexibility: Certification frameworks should accommodate various types of participants and technical implementations.

- Scalability: The framework must scale with the growth of the ecosystem, avoiding bottlenecks or excessive costs for new participants.

- Transparency: Clear guidelines and accessible processes help foster adoption and trust among participants.

- Interoperability: Certifications should align with international standards where possible to enable cross-border or cross-ecosystem collaborations.

By implementing a robust certification framework, ecosystems can ensure secure, reliable, and standardized interactions among participants, supporting the broader goals of openness, competition, and innovation.

What Is The Team Managing The Ecosystem

Raidiam enables governing bodies to delegate the configuration and management of the ecosystem (the underlying Trust Platform) to key roles, such as Super Users and Data Administrators. These roles are essential for administering and maintaining the ecosystem’s setup, onboarding and managing participants, and ensuring smooth operations during data exchanges.

Super Users

Super Users hold a unique position within the Raidiam Trust Platform, with comprehensive control over the entire ecosystem. They are responsible for the overall management and maintenance of the system, ensuring its optimal functioning, security, and stability. Super Users possess the ability to:

- Oversee all organizations and users within the ecosystem.

- Make critical decisions and adjustments to the ecosystem’s configuration.

- Manage system-wide settings, configurations, and security protocols.

This level of authority is crucial for maintaining the integrity and reliability of the platform, ensuring a trustworthy and efficient environment for all participants.

Data Administrators

The Data Administrator is a user role within Raidiam, acting on behalf of the Trust Framework Administrator organization. Their primary responsibility is to manage data within the ecosystem, focusing on the onboarding and ongoing management of organizations participating in the data exchange.

While Data Administrators have extensive control over managing the data associated with the ecosystem, they do not have control over Reference Data, which is exclusively managed by Super Users. This division of responsibilities ensures that sensitive and foundational data is managed at the highest level of authority.

Support and Onboarding

While Raidiam handles the underlying Trust Platform and ensures its reliability, it is the responsibility of the governing body to provide support during onboarding and for general assistance not related to the technical functioning of the platform.

In most cases, a dedicated service desk should be created to enable participants to raise support tickets and receive assistance with non-technical inquiries or issues. This support framework helps ensure smooth and efficient onboarding and continued participation in the ecosystem.

What Types Of Participants Will Be Included In The Ecosystem

In Open or Closed Data ecosystems, participants play critical roles in the exchange and utilization of data. While the core participants typically fall into two categories, the ecosystem can be designed to accommodate a diverse range of entities based on their roles, permissions, and the domains they are assigned to.

Core Participant Types

- Data Providers

  Data Providers are organizations that offer their data to other organizations within the ecosystem. Access to their data is often governed by user consent, ensuring compliance with privacy and regulatory standards.

  - Dual Role Capability: Data Providers can also act as Data Receivers, requesting and consuming data from other organizations to enhance their own business operations or offerings.

- Data Receivers

  Data Receivers are organizations that consume data offered by Data Providers. They leverage this data to drive innovation, create new services, or improve existing operations.

Extending Beyond Core Roles

The ecosystem is not restricted to the Data Provider and Data Receiver roles. Its flexible design allows for additional types of participants based on the specific needs and goals of the governing body.

For example:

- External Service Providers

  Governing bodies may include external organizations to enhance the ecosystem's offering. These could include platforms for monitoring, analytics, or other services that do not directly provide or consume the ecosystem’s primary data. Such participants benefit from:

  - Enhanced Security: Secure integration within the ecosystem.

  - API Registration and Certification: The ability to register APIs for integration with ecosystem participants.

  - Single Sign-On (SSO): Leveraging Raidiam's SSO capabilities to enable ecosystem participants to access external platforms using their existing accounts stored within the ecosystem.

  - Certificate Provisioning: Access to ecosystem-managed certificates for authentication and secure communication.

By supporting diverse types of participants, ecosystems can foster collaboration, extend their value proposition, and provide flexibility for future innovation. The governing body defines how participants interact with the ecosystem, ensuring roles and permissions align with the ecosystem’s goals and policies.

What Authorities and Domains Exist Within the Ecosystem

The governance structure of an ecosystem relies on Authorities and Domains to organize, manage, and regulate participant activities. Together, they form a scalable and flexible framework that accommodates diverse operational needs and ensures effective oversight.

Authorities

Authorities are central to the governance of the ecosystem. They are responsible for accrediting participants, assigning Domains, and defining roles and permissions.

Key characteristics of Authorities include:

- Operational Scope: While often associated with a specific country or region, Authorities can operate across national boundaries if required, enabling the ecosystem to support international collaboration.

- Multiplicity and Collaboration: An ecosystem can include multiple Authorities, each contributing to the governance of specific sectors or domains. This multiplicity ensures diverse expertise and shared responsibility across the ecosystem.

Domains

Domains represent the highest level of categorization within the ecosystem, providing a primary framework for organizing activities and managing participants.

Key characteristics of Domains include:

- Classification and Management: Domains group participants and activities into logical categories, simplifying ecosystem governance.

- Customization and Flexibility: Domains can be tailored to reflect various operational areas without being tied to specific regional or country identifiers. This approach enhances global applicability and allows for seamless international integration.

- Cross-Domain Participation: Organizations can participate in multiple domains.

For example, a bank naturally assigned to the Banking Domain could also be included in the Insurance Domain. This flexibility allows insurance providers to connect to the bank, retrieve account information, and initiate payments for insurance policies as needed.

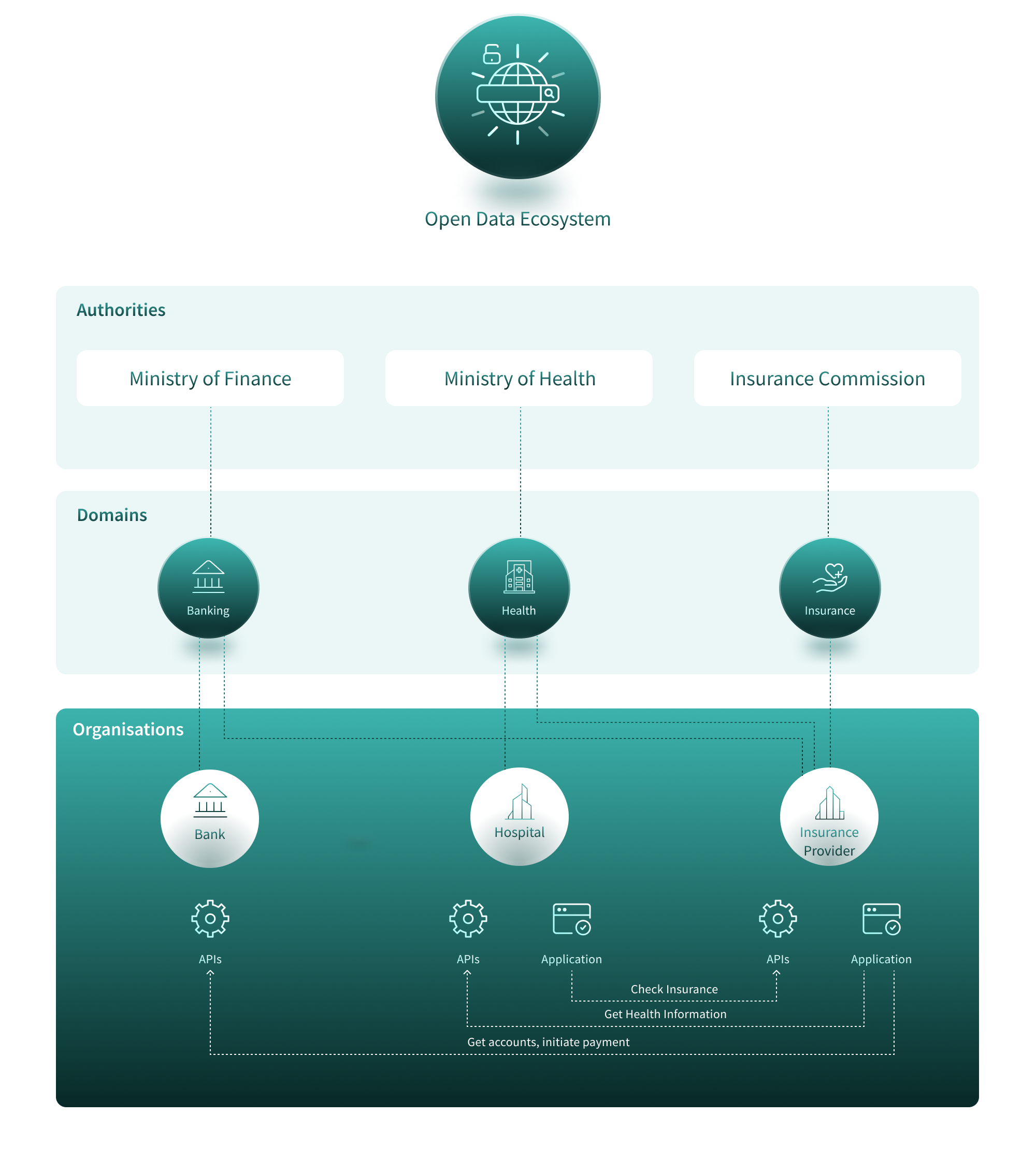

Example: A Multi-Sector Open Data Ecosystem

In a large Open Data ecosystem, multiple Authorities and Domains might coexist to govern and organize different sectors:

- Authorities:

  - A Ministry of Finance to oversee banking and financial data-sharing activities.

  - A Ministry of Health to regulate health-related data exchanges.

  - An Insurance Commission to govern insurance-related data-sharing initiatives.

- Domains:

  - A Banking Domain to manage participants and activities in the financial sector.

  - A Health Domain to classify and manage health-related data exchanges.

  - An Insurance Domain to handle insurance sector participants and activities.

This structure allows the ecosystem to maintain clarity and accountability across multiple sectors while fostering collaboration and scalability. By decoupling domains from regional or national identifiers, ecosystems can adapt to diverse use cases and operational needs, both locally and globally.
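The relationship between Authorities, Domains, and organisations in the example can be modelled with a few small types. This is a sketch only: the class and function names are assumptions, and it simplifies by giving each Domain exactly one governing Authority, which the platform may not require.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass(frozen=True)
class Domain:
    name: str
    authority: str  # the Authority governing this Domain (one per Domain here)


@dataclass
class Organisation:
    name: str
    domains: Set[str] = field(default_factory=set)


# Reference data taken from the multi-sector example above.
DOMAINS: Dict[str, Domain] = {
    "Banking": Domain("Banking", authority="Ministry of Finance"),
    "Health": Domain("Health", authority="Ministry of Health"),
    "Insurance": Domain("Insurance", authority="Insurance Commission"),
}


def assign_domain(org: Organisation, domain_name: str) -> None:
    """Add an organisation to a Domain, rejecting unknown Domain names."""
    if domain_name not in DOMAINS:
        raise KeyError(f"unknown domain: {domain_name}")
    org.domains.add(domain_name)


def governing_authorities(org: Organisation) -> Set[str]:
    """Authorities that oversee the organisation across all its Domains."""
    return {DOMAINS[name].authority for name in org.domains}
```

A bank assigned to both the Banking and Insurance Domains would therefore fall under two Authorities, matching the cross-domain participation described earlier.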

What Roles and Permissions are Available for Organisations and Resources

Roles, defined within each Domain, serve as the foundation for managing permissions and access in the ecosystem. They specify the functions or privileges granted to participants, ensuring a structured and secure operational framework.

Diversity and Specificity of Roles

Roles can range from general categorizations of participants (e.g., Data Provider, Data Receiver) to highly specific operational permissions (e.g., data access rights, API management capabilities). This flexibility allows the ecosystem to adapt to a wide range of requirements while maintaining control over participant activities.

Role Claims and Assignments

Roles are assigned based on Domain Role Claims, a mechanism that ensures clarity and regulation of each participant’s capabilities and permissions. These claims define the specific actions and access levels granted to participants within their assigned roles.

Role Claims are assigned by the Authority governing the Domain that contains the particular role being assigned.
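The rule that a Role Claim must come from the Authority governing the relevant Domain can be expressed as a simple validation step. The reference data and names below are hypothetical and exist only to illustrate the check.

```python
from dataclasses import dataclass

# Hypothetical reference data: the Authority governing each Domain,
# and the roles each Domain defines.
DOMAIN_AUTHORITY = {
    "Banking": "Ministry of Finance",
    "Insurance": "Insurance Commission",
}
DOMAIN_ROLES = {
    "Banking": {"Data Provider", "Data Receiver"},
    "Insurance": {"Data Receiver"},
}


@dataclass(frozen=True)
class RoleClaim:
    organisation: str
    domain: str
    role: str
    assigned_by: str  # the Authority attempting the assignment


def validate_role_claim(claim: RoleClaim) -> None:
    """Raise if the role is not defined in the Domain or the assigner is wrong."""
    if claim.role not in DOMAIN_ROLES.get(claim.domain, set()):
        raise ValueError(
            f"role {claim.role!r} is not defined in domain {claim.domain!r}")
    if DOMAIN_AUTHORITY[claim.domain] != claim.assigned_by:
        raise PermissionError(
            f"{claim.assigned_by!r} does not govern domain {claim.domain!r}")
```

Checking the role against the Domain first means an unknown Domain is reported as a data error rather than a permissions error.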

Metadata and Access Control

Each Domain Role can be associated with specific OAuth metadata types. This metadata dictates the permissions and access levels tied to the role, enabling fine-grained access control within the ecosystem.

By associating roles with OAuth metadata, the ecosystem achieves:

- Granular Access Control: Ensuring that participants operate within the boundaries of their assigned roles and permissions.

- Enhanced Security: Limiting access to sensitive data and resources based on predefined scopes and claims.

OAuth Metadata Types in Raidiam

The following OAuth metadata types are used to define and control access for roles:

- Grant Types:

  - Flows through which an application obtains an access token.

  - Examples: Authorization Code, Client Credentials, and Refresh Token.

  - Grant types are assigned based on the application’s trust level and user experience requirements.

- OAuth Scopes:

  - Specify the access privileges being requested during authorization.

  - Define the extent of access an application has to a user’s data.

  - Examples: `read:accounts`, `write:transactions`.

- ID Claims (claim):

  - Pieces of information asserted about a user, included in ID Tokens within OpenID Connect.

  - Examples: User details like `name`, `email`, or `preferred_username`.

- Response Types:

  - Determine the details returned from the initial authorization request.

  - Examples: `code` (authorization code), `token` (access token), or `id_token`.

By leveraging these OAuth metadata types, the ecosystem ensures that roles and permissions are not only clearly defined but also rigorously enforced. This allows for secure, efficient, and scalable participant interactions within the ecosystem.

Sample Domain, Role, and Metadata Mapping

| Domain | Role | Technical Metadata Type | Technical Metadata Value |
|---|---|---|---|
| PSD2 | PISP | scope | openid payments |
| PSD2 | PISP | grant_type | authorization_code |
| Open Banking | DADOS | response_type | code id_token |
| Retail Banking | Data Provider | scope | make:payments |
| Commercial Banking | Data Receiver | grant_type | authorization_code |
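The sample mapping can be turned into a lookup that decides which OAuth metadata an organisation's applications may use. The rows below are adapted from the table, with grant type values written as the standard OAuth identifier `authorization_code`. The function names are assumptions made for this sketch.

```python
from collections import defaultdict

# (domain, role, metadata type, metadata value), adapted from the sample table.
ROLE_METADATA = [
    ("PSD2", "PISP", "scope", "openid payments"),
    ("PSD2", "PISP", "grant_type", "authorization_code"),
    ("Open Banking", "DADOS", "response_type", "code id_token"),
    ("Retail Banking", "Data Provider", "scope", "make:payments"),
    ("Commercial Banking", "Data Receiver", "grant_type", "authorization_code"),
]


def allowed_metadata(role_claims):
    """Return {metadata_type: set(values)} for a set of (domain, role) claims."""
    allowed = defaultdict(set)
    for domain, role, meta_type, value in ROLE_METADATA:
        if (domain, role) in role_claims:
            # A scope value is a space-delimited list of individual scopes.
            # A response_type such as "code id_token" is one combined value.
            values = value.split() if meta_type == "scope" else [value]
            allowed[meta_type].update(values)
    return dict(allowed)


def is_request_allowed(role_claims, scope: str, grant_type: str) -> bool:
    """Check a requested scope string and grant type against the role claims."""
    allowed = allowed_metadata(role_claims)
    requested_scopes = set(scope.split())
    return (requested_scopes <= allowed.get("scope", set())
            and grant_type in allowed.get("grant_type", set()))
```

For an organisation holding the PSD2 PISP role, a request for `openid payments` with the authorization code grant passes, while a request that adds a scope outside the role is rejected.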

What Does the Onboarding Flow Look Like

An essential consideration for ecosystem design is defining the onboarding process for participants. Typically, onboarding is divided into two distinct processes:

- Onboarding to the Sandbox Environment: Provides a safe testing environment where participants can integrate and validate their systems before moving to production.

- Onboarding to the Production Environment: Allows participants to operate in the live ecosystem.

While the processes for both environments are generally similar, the production onboarding may include stricter checks and additional steps to ensure readiness and compliance.

Typical Onboarding Process

-

Access Request:

- A primary business contact or an authorized representative from the organization submits an access request via a service desk or request form.

-

KYC for the Individual:

- A Know Your Customer (KYC) process is conducted to verify the individual’s identity.

-

KYC for the Organization:

- The organization’s identity and credentials are verified.

-

Authorization Check:

- It is verified whether the individual has the authority to onboard the organization to the ecosystem.

-

Organization Creation:

- A new organization entry is created in the ecosystem, and domains and roles are assigned based on its intended participation.

-

User Addition:

- The individual is added to the organization as a user.

-

Registration Confirmation:

- The individual receives confirmation of registration and instructions for account creation.

-

Terms and Conditions Acceptance:

- During account creation, the user may be required to accept the ecosystem’s Terms and Conditions.

-

Director Approval:

- The user assigns organization directors to review and sign the Terms and Conditions required for the organization’s participation.

-

Terms Validation:

- The signed Terms and Conditions are validated by the ecosystem’s governing body.

-

User Role Assignment:

- The individual is promoted to Organization Administrator, granting them management permissions.

-

User Registration:

- The Organization Administrator registers additional users as needed.
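The steps above form a sequence in which each step builds on the previous one. As a rough illustration, the Python sketch below models that sequence as an ordered checklist. The step names mirror the list above, but the `OnboardingStep` and `OnboardingCase` types and their methods are hypothetical and are not part of any Raidiam API.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import List, Optional


class OnboardingStep(IntEnum):
    """Steps of the typical onboarding process, in order."""
    ACCESS_REQUEST = 1
    KYC_INDIVIDUAL = 2
    KYC_ORGANIZATION = 3
    AUTHORIZATION_CHECK = 4
    ORGANIZATION_CREATION = 5
    USER_ADDITION = 6
    REGISTRATION_CONFIRMATION = 7
    TERMS_ACCEPTANCE = 8
    DIRECTOR_APPROVAL = 9
    TERMS_VALIDATION = 10
    USER_ROLE_ASSIGNMENT = 11
    USER_REGISTRATION = 12


@dataclass
class OnboardingCase:
    """Hypothetical tracker for one organization's onboarding."""
    organization: str
    completed: List[OnboardingStep] = field(default_factory=list)

    def next_step(self) -> Optional[OnboardingStep]:
        """Return the next pending step, or None when onboarding is done."""
        if len(self.completed) == len(OnboardingStep):
            return None
        return OnboardingStep(len(self.completed) + 1)

    def complete(self, step: OnboardingStep) -> None:
        """Mark a step as done; steps must be completed in order."""
        expected = self.next_step()
        if step != expected:
            raise ValueError(f"expected {expected!r}, got {step!r}")
        self.completed.append(step)


case = OnboardingCase("Example Bank")
case.complete(OnboardingStep.ACCESS_REQUEST)
print(case.next_step().name)
```

An ecosystem with a different flow would reorder or extend the enum. Sandbox and production onboarding could each use their own case instance.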

Additional Onboarding Steps

Depending on the ecosystem’s requirements, additional steps may be needed, such as:

-

Certification of Authorization Server or Data APIs: Ensures compliance with ecosystem standards for secure data exchange.

-

Application Certification: Validates that participant applications meet required technical and operational standards.

-

Conformance Testing: If a conformance suite is available, participants must test their applications, authorization servers, or APIs against the suite to verify compliance.

Ongoing Monitoring

The onboarding process does not conclude with initial registration. Continuous monitoring is essential to ensure participants remain compliant with ecosystem standards. Monitoring activities may include:

-

Activity and Performance Tracking: Observing usage patterns and system performance.

-

Conformance Checks: Ensuring continued adherence to security and interoperability standards.

By designing a clear and structured onboarding flow, the ecosystem can streamline participant integration while maintaining the security, reliability, and compliance required for successful operation.

What Flags Will Be Needed To Distinguish Resources

Flags are labels or tags used to categorize technical resources within the Raidiam Trust Platform. Managed by Trust Framework Administrators (Super Users), Flags are part of the system’s Reference Data and serve as a flexible tool for organizing and managing entities across the ecosystem.

Flags provide a way to:

-

Group Resources: Organize Organisations, Applications, and Authorization Servers logically based on common characteristics.

-

Filter and Search: Enable administrators to quickly locate and manage resources.

-

Categorize and Identify: Label resources for easier tracking and management within the ecosystem.

Examples of Flags

Flags can be tailored to represent various attributes or classifications of resources. Below are some common examples:

- Organization Type:
  - Bank
  - Payment Service Provider (PSP)
  - FinTech
  - Regulatory Body
  - Insurance Provider
- Compliance Level:
  - PSD2 Compliant
  - GDPR Compliant
  - Open Banking Standard Certified
- Organization Size:
  - Enterprise
  - SME
  - Startup

By leveraging Flags, the ecosystem gains a powerful tool to manage and organize resources efficiently, ensuring that resources are easily identifiable and aligned with the operational goals of the ecosystem.
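To make the grouping and filtering idea concrete, here is a minimal Python sketch that treats Flags as a set of labels attached to each resource. The `Resource` type, the `filter_by_flags` helper, and the example organisations are all hypothetical. The sketch does not reflect how the platform stores or queries Flags.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Resource:
    """Hypothetical stand-in for an organisation, application, or server."""
    name: str
    kind: str  # e.g. "organisation", "application", "authorization_server"
    flags: FrozenSet[str] = field(default_factory=frozenset)


def filter_by_flags(resources: Iterable[Resource], *required: str) -> List[Resource]:
    """Return the resources that carry every one of the required flags."""
    wanted = set(required)
    return [r for r in resources if wanted <= r.flags]


# Invented example data using the flag values listed above.
resources = [
    Resource("Example Bank", "organisation",
             frozenset({"Bank", "Enterprise", "PSD2 Compliant"})),
    Resource("Example Pay", "organisation",
             frozenset({"Payment Service Provider (PSP)", "SME"})),
    Resource("Example Ledger", "organisation",
             frozenset({"FinTech", "Startup", "PSD2 Compliant"})),
]

print([r.name for r in filter_by_flags(resources, "PSD2 Compliant")])
```

Passing several flags narrows the result to resources that carry all of them, for example `filter_by_flags(resources, "PSD2 Compliant", "Startup")`.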

Sample Ecosystems

For reference, you can check the following Open Data ecosystems and their design specifications:

-

Security Standards:

-

Trust Framework Guides:

Open Banking

Authority

In an Open Banking ecosystem, the Authority might be a financial regulatory body, such as a Ministry of Finance or a dedicated regulator like the UK Financial Conduct Authority (FCA). This Authority is responsible for accrediting participants and ensuring compliance with relevant regulations.

Domains

The Authority might establish Domains to represent distinct areas of the financial services ecosystem. Examples include:

-

Retail Banking: Encompasses services provided to individual consumers, such as account information, personal loans, and mortgages.

-

Commercial Banking: Focuses on services for businesses, such as corporate accounts, loans, and cash management.

-

Payments: Covers payment initiation services and the processing of transactions.

-

Lending: Includes credit provisioning, such as loans and credit card services.

Roles

Within these domains, specific roles define the functions and permissions of participants. Examples include:

- Retail Banking Domain
  - Data Provider (Bank): Provides account information and transaction data upon user consent.
  - Data Receiver (Third-Party Providers, Banks): Retrieves user-authorized data to offer personal finance management tools or credit assessments.
- Commercial Banking Domain
  - Corporate Data Provider: Offers corporate account data and financial reports.
  - Corporate Data Receiver: Accesses data for enterprise tools like ERP systems or corporate credit underwriting.
  - Treasury Manager: Manages cash flows and liquidity for corporate clients using ecosystem tools.
- Payments Domain
  - Payment Initiation Service Provider (PISP): Initiates user-authorized payments on behalf of other participants.
  - Payment Processor: Manages the backend for payment transaction clearing and settlement.
  - Fraud Monitor: Monitors payment activity for fraudulent transactions and flags potential risks.
- Lending Domain
  - Loan Provider: Offers lending services such as personal or business loans.
  - Loan Aggregator: Collects and compares loan offers from multiple providers.
  - Credit Scorer: Evaluates creditworthiness using ecosystem data.

By structuring the Open Banking ecosystem into well-defined domains with clear roles, participants can operate efficiently, securely, and in compliance with regulatory requirements.
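The relationship between domains and roles can be pictured as a lookup table: a role is only meaningful inside the domain that defines it. The Python sketch below captures the example model above and checks a set of requested role assignments against it. The `DOMAIN_ROLES` table and the `invalid_assignments` helper are illustrative names only, not platform features.

```python
from typing import Dict, List, Set, Tuple

# Domains and roles taken from the Open Banking example above.
DOMAIN_ROLES: Dict[str, Set[str]] = {
    "Retail Banking": {"Data Provider", "Data Receiver"},
    "Commercial Banking": {
        "Corporate Data Provider",
        "Corporate Data Receiver",
        "Treasury Manager",
    },
    "Payments": {
        "Payment Initiation Service Provider",
        "Payment Processor",
        "Fraud Monitor",
    },
    "Lending": {"Loan Provider", "Loan Aggregator", "Credit Scorer"},
}


def invalid_assignments(assignments: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return the (domain, role) pairs that the model does not define."""
    return [
        (domain, role)
        for domain, role in assignments
        if role not in DOMAIN_ROLES.get(domain, set())
    ]


# Hypothetical organisation requesting roles in three domains.
requested = [
    ("Retail Banking", "Data Provider"),
    ("Payments", "Payment Processor"),
    ("Lending", "Treasury Manager"),  # not a Lending role in this model
]
print(invalid_assignments(requested))
```

Keeping roles scoped to a domain in this way means the same organisation can hold different roles in different domains without ambiguity.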

Open Health

Authority

The Authority in an Open Health ecosystem might be a national health authority (e.g., the Ministry of Health) or a specialized healthcare data governance body, such as a National Health Data Agency. This Authority is responsible for accrediting participants, defining regulations for data sharing, and ensuring compliance with privacy and security standards.

Domains

The Authority may establish Domains to represent distinct operational areas within the health ecosystem. Examples include:

-

Patient Data Management: Covers the storage, protection, and regulated access to patient records.

-

Healthcare Provider Services: Encompasses interactions between providers, such as referrals, care coordination, and health service documentation.

-

Medical Research: Facilitates the sharing of health data for research purposes while adhering to strict privacy standards.

-

Public Health Monitoring: Focuses on the collection and analysis of population health data to guide policy decisions and responses to health crises.

Roles

Within these domains, specific roles define the responsibilities and permissions of participants. Examples include:

- Patient Data Management Domain
  - Data Custodian: Responsible for securely storing and managing patient data, ensuring compliance with regulations.
  - Data Accessor: Includes healthcare providers, such as doctors and nurses, who access patient records for diagnosis and treatment.
- Healthcare Provider Services Domain
  - Referral Provider: Facilitates referrals between healthcare providers to ensure continuity of care.
  - Care Coordinator: Manages and oversees patient care plans across multiple providers.
- Medical Research Domain
  - Research Entity: Organizations conducting medical research using anonymized or pseudonymized health data.
  - Data Contributor: Institutions, such as hospitals and biobanks, that supply data for research purposes.
- Public Health Monitoring Domain
  - Epidemiologist: Uses health data to track and analyze disease trends.
  - Health Policy Maker: Leverages aggregated health data to inform policy decisions and interventions.
  - Incident Manager: Handles public health emergencies, such as disease outbreaks, by coordinating responses based on real-time data.

By organizing the Open Health ecosystem into clear domains with well-defined roles, participants can collaborate effectively while ensuring compliance with privacy, security, and ethical standards.

Global Open Data Federation

The Global Open Data Federation is a fictional yet illustrative example of how a large-scale network of federations can facilitate secure and trusted data sharing at a global level.

Using Raidiam’s capabilities and the concept of OpenID Federation, this ecosystem demonstrates the potential for enabling seamless trust and interoperability across diverse regions and sectors.

Structure of the Global Federation

The structure outlined below is fictional and provided solely for illustrative purposes to demonstrate the potential of a global federated data-sharing network.

The federation is organized hierarchically, connecting regional and sector-specific federations into a unified global network:

- Global Open Data Federation
  - Open Data Brasil
    - Open Banking Brasil
    - Open Insurance Brasil
  - Open States
    - Financial Data Exchange
    - Healthcare +
  - Consumer Data Right (CDR)
    - Open Banking
    - Open Energy
  - And more...

This structure highlights how federations can represent different regions, industries, and initiatives while remaining part of a larger, interoperable network.

The Role of OpenID Federation in Enabling Trust

The key to such a global network lies in the OpenID Federation standard, which allows for the establishment of trust between participants without requiring direct registration at each authorization server.

In simplified terms, OpenID Federation relies on:

-

Trust Chain: A trust chain is created, linking the entity requesting access to resources (Data Receiver), the federation, and the entity offering its data (Data Provider).

-

Dynamic Trust Building: By following the chain upward, organizations that were previously unaware of each other’s existence can establish trust dynamically.

-

Data Exchange: Once trust is established, these organizations can securely exchange data without manual pre-registration processes.
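In the OpenID Federation specification, a trust chain is an ordered list of signed entity statements. It starts with the leaf entity's own configuration, continues with the statement each superior issues about its subordinate, and ends at the trust anchor. The Python sketch below checks only the issuer and subject linkage between statements. Signature verification, expiry checks, and metadata policy handling are deliberately left out, and the entity identifiers are invented for illustration.

```python
from typing import Dict, List

Statement = Dict[str, str]  # only the "iss" and "sub" claims are modelled


def chain_links_to_anchor(chain: List[Statement], trust_anchor: str) -> bool:
    """Check issuer/subject linkage from a leaf up to the trust anchor.

    Signature, expiry, and metadata policy checks are deliberately omitted.
    """
    if not chain:
        return False
    # The chain starts with the leaf's self-issued entity configuration.
    if chain[0]["iss"] != chain[0]["sub"]:
        return False
    # The issuer of each statement must be the subject of the next one.
    for lower, upper in zip(chain, chain[1:]):
        if lower["iss"] != upper["sub"]:
            return False
    # The chain ends with the trust anchor's own entity configuration.
    last = chain[-1]
    return last["iss"] == trust_anchor and last["sub"] == trust_anchor


# Hypothetical entity identifiers.
LEAF = "https://receiver.example"
FEDERATION = "https://federation.example"
ANCHOR = "https://anchor.example"

chain = [
    {"iss": LEAF, "sub": LEAF},          # leaf entity configuration
    {"iss": FEDERATION, "sub": LEAF},    # federation vouches for the leaf
    {"iss": ANCHOR, "sub": FEDERATION},  # anchor vouches for the federation
    {"iss": ANCHOR, "sub": ANCHOR},      # trust anchor entity configuration
]
print(chain_links_to_anchor(chain, ANCHOR))
```

A real implementation would also verify each statement's signature against keys published by its issuer. The linkage check alone proves nothing about authenticity.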

Example Use Case

Consider a financial institution in Open Banking Brasil that needs to access data from a financial institution in Open Banking (Australia) under the Consumer Data Right initiative:

-

The institution’s authorization request initiates a trust chain validation.

-

The trust chain links the institution upward through the Open Banking Brasil Federation and Open Data Brasil to the Global Open Data Federation, then downward through the Consumer Data Right Federation to the Open Banking provider in Australia.

-

The validated trust chain enables secure data exchange between the two participants, even though they belong to different regions and federations.
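The route described in this use case can be derived from the hierarchy itself: walk up from the requesting federation to the nearest shared ancestor, then down to the target. The Python sketch below does this for the fictional structure in this section. The `PARENT` table and both helper functions are hypothetical, and the label "Open Banking (CDR)" is an assumption used only to distinguish the Australian federation from Open Banking Brasil.

```python
from typing import Dict, List, Optional

# Parent of each federation in the fictional hierarchy; the root has no parent.
PARENT: Dict[str, Optional[str]] = {
    "Global Open Data Federation": None,
    "Open Data Brasil": "Global Open Data Federation",
    "Open Banking Brasil": "Open Data Brasil",
    "Open Insurance Brasil": "Open Data Brasil",
    "Open States": "Global Open Data Federation",
    "Financial Data Exchange": "Open States",
    "Consumer Data Right (CDR)": "Global Open Data Federation",
    "Open Banking (CDR)": "Consumer Data Right (CDR)",
    "Open Energy": "Consumer Data Right (CDR)",
}


def path_to_root(node: str) -> List[str]:
    """List a federation and all of its ancestors, bottom to top."""
    path = [node]
    while PARENT[path[-1]] is not None:
        path.append(PARENT[path[-1]])
    return path


def trust_path(source: str, target: str) -> List[str]:
    """Walk up from source to the nearest shared ancestor, then down to target."""
    up = path_to_root(source)
    down = path_to_root(target)
    shared = next(n for n in up if n in down)
    return up[: up.index(shared) + 1] + down[: down.index(shared)][::-1]


print(" -> ".join(trust_path("Open Banking Brasil", "Open Banking (CDR)")))
```

Two federations under the same parent, such as Open Banking Brasil and Open Insurance Brasil, meet at Open Data Brasil without reaching the global root.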

Key Benefits of a Global Open Data Federation

-

Interoperability: Organizations from different federations can securely share data across sectors and geographies.

-

Efficiency: Trust chains eliminate the need for manual registrations at multiple authorization servers, streamlining interactions.

-

Scalability: The hierarchical structure accommodates new federations and participants without disrupting the existing network.

-

Security: Each link in the trust chain is a signed statement, so trust can be verified cryptographically at every step rather than assumed.

By leveraging Raidiam’s platform and the principles of OpenID Federation, a Global Open Data Federation can transform how data is shared and trusted on a global scale, unlocking new opportunities for collaboration and innovation.